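After the earlier posts, fetching the data is no longer a problem. This post records how to save the scraped items to a file, and how to make sure Chinese text is written to that file as Chinese characters.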

1. bqb.py: example spider code

    '''---------------------------------
    # @Date: 2023-10-25 16:39:05
    # @Author: Devin
    # @Last Modified: 2023-11-27 16:26:06
    ------------------------------------'''
    import scrapy


    class Myspider(scrapy.Spider):
        name = 'bqb'
        # 2. Check the allowed domain
        allowed_domains = ["itcast.cn"]
        # 1. Set the start URL
        start_urls = ["https://www.itcast.cn/channel/teacher.shtml"]

        # 3. Implement the crawl logic
        def parse(self, response):
            # Define how the response from the site is handled
            # Select every teacher node on the page
            node_list = response.xpath("//div[@class='li_txt']")
            # Iterate over the teacher nodes
            for node in node_list:
                temp = {}
                # xpath() returns a list of selector objects
                # temp["name"] = node.xpath("./h3/text()").extract_first()  # avoids an error on an empty list
                temp["name"] = node.xpath("./h3/text()")[0].extract()
                temp["title"] = node.xpath("./h4/text()")[0].extract()
                temp["desc"] = node.xpath("./p/text()")[0].extract()
                yield temp
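The commented-out `extract_first()` line in the spider hints at a pitfall: indexing `[0]` on an empty result raises an error when a node lacks an `h3`, `h4`, or `p`. The sketch below illustrates the same per-node extraction with a guard. It uses the standard library's `xml.etree.ElementTree` as a stand-in for Scrapy's selectors so that it runs without a network request. The sample markup and the `first_text` and `parse_teachers` helpers are hypothetical, and the real page structure may differ.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample markup; the second entry has no <p> description.
SAMPLE = """
<root>
  <div class="li_txt"><h3>张老师</h3><h4>高级讲师</h4><p>十年开发经验</p></div>
  <div class="li_txt"><h3>李老师</h3><h4>讲师</h4></div>
</root>
"""


def first_text(node, tag):
    """Return the text of the first child <tag>, or None if it is missing."""
    child = node.find(tag)
    return child.text if child is not None else None


def parse_teachers(markup):
    """Yield one dict per teacher node, mirroring the spider's parse()."""
    root = ET.fromstring(markup)
    for node in root.findall(".//div[@class='li_txt']"):
        yield {
            "name": first_text(node, "h3"),
            "title": first_text(node, "h4"),
            "desc": first_text(node, "p"),
        }


teachers = list(parse_teachers(SAMPLE))
for teacher in teachers:
    print(teacher)
```

In Scrapy itself, `extract_first()` (or its newer alias `get()`) plays the role of `first_text`: it returns `None` rather than raising when nothing matches.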

2. pipelines.py: example pipeline code

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

    import json
    import codecs


    class DemoPipeline(object):
        def __init__(self):
            self.file = codecs.open("itcast.json", 'wb', encoding="utf-8")

        def process_item(self, item, spider):
            # item is whatever the spider in bqb.py yields
            # Serialize the dict; ensure_ascii=False keeps Chinese characters as-is
            json_data = json.dumps(dict(item), ensure_ascii=False) + ",\n"
            # Write to the file
            self.file.write(json_data)
            return item

        def __del__(self):
            self.file.close()
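Relying on `__del__` to close the file works in practice here, but Python does not guarantee when (or whether) it runs. Scrapy also calls the optional `open_spider` and `close_spider` methods on a pipeline, which give a more predictable place to open and close the file. The following is a minimal sketch of that variant, not the post's original code: the class name `JsonLinesPipeline`, the `path` argument, and the one-object-per-line output (instead of the trailing `,`) are assumptions made for illustration.

```python
import json
import os
import tempfile


class JsonLinesPipeline:
    """Write each item as one JSON object per line, keeping non-ASCII text as-is."""

    def __init__(self, path="itcast.json"):
        # Hypothetical parameter so the output path can be changed in the demo.
        self.path = path
        self.file = None

    def open_spider(self, spider):
        # Called by Scrapy once when the spider starts.
        self.file = open(self.path, "w", encoding="utf-8")

    def process_item(self, item, spider):
        # ensure_ascii=False writes Chinese characters instead of \uXXXX escapes.
        self.file.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        return item

    def close_spider(self, spider):
        # Called by Scrapy once when the spider finishes.
        self.file.close()


# Drive the pipeline by hand, outside Scrapy, to check what it writes.
demo_path = os.path.join(tempfile.mkdtemp(), "itcast.json")
pipeline = JsonLinesPipeline(demo_path)
pipeline.open_spider(spider=None)
pipeline.process_item({"name": "张老师", "title": "高级讲师"}, spider=None)
pipeline.close_spider(spider=None)

with open(demo_path, encoding="utf-8") as fh:
    written = fh.read()
print(written)
```

Writing one object per line also means each line can be parsed on its own with `json.loads`, which the trailing-comma format does not allow.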

3. settings.py: configuration changes (2 places)

    ITEM_PIPELINES = {
        # 100 is the pipeline priority; lower values run first
        "demo.pipelines.DemoPipeline": 100,
    }

    FEED_EXPORT_ENCODING = 'utf-8'

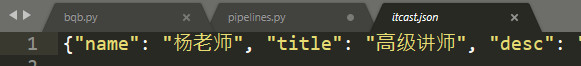
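The `FEED_EXPORT_ENCODING` setting applies to Scrapy's built-in feed exports (for example, output requested with `-o`), while the custom pipeline gets the same effect from `ensure_ascii=False`. The difference is easy to see with the standard library alone. This sketch uses a made-up item; only `json.dumps` and its `ensure_ascii` flag come from the pipeline code.

```python
import json

# Hypothetical item, shaped like the dicts the spider yields.
item = {"name": "张老师", "title": "高级讲师"}

escaped = json.dumps(item)                       # default: ensure_ascii=True
readable = json.dumps(item, ensure_ascii=False)  # keep the characters as-is

print(escaped)
print(readable)

# Both strings decode back to the same data; only the written form differs.
assert json.loads(escaped) == json.loads(readable) == item
```

The escaped form is still valid JSON, so nothing is lost either way; the choice only matters when someone needs to read the file directly.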

4. Example output